Researchers from UC Berkeley, the National University of Singapore, and Meta have presented a paper demonstrating that Stable Diffusion can be used to generate neural network parameters.

The method, called p-diff, is based on the Stable Diffusion architecture and makes it possible to create new models without actually training them.

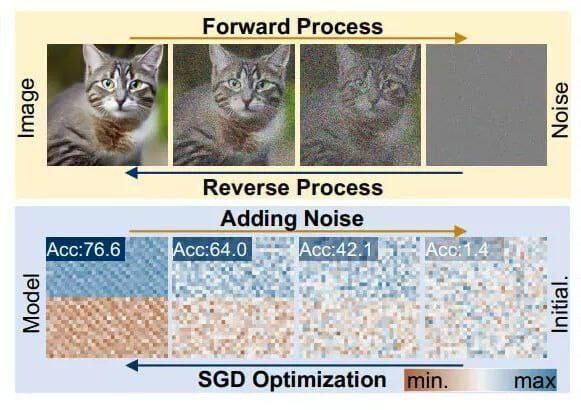

The process looks like this:

➖The Stable Diffusion architecture is trained to perform a specific task.

➖The trained weights of several models, covering various tasks, are saved.

➖From each set of parameters, subsets are identified.

➖Subsets are converted into one-dimensional vectors.

➖An encoder is trained to reconstruct the original parameters from these vectors.
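
The "subset to one-dimensional vector" steps above can be sketched roughly as follows. This is a minimal illustration, not the authors' code: nested Python lists stand in for weight tensors, the checkpoint contents and layer names (`conv.weight`, `bn.weight`, `bn.bias`) are made up, and the choice of which layers form the subset is an assumption. The helper names `select_subset`, `flatten`, and `unflatten` are hypothetical.

```python
from math import prod

def select_subset(state, names):
    """Pick the named parameter tensors from a saved checkpoint."""
    return {name: state[name] for name in names}

def shape_of(tensor):
    """Shape of a regular nested list, e.g. [[1, 2], [3, 4]] -> (2, 2)."""
    shape = []
    while isinstance(tensor, list):
        shape.append(len(tensor))
        tensor = tensor[0]
    return tuple(shape)

def flatten_tensor(tensor):
    """Flatten a nested list into a flat list of numbers (row-major)."""
    if not isinstance(tensor, list):
        return [tensor]
    flat = []
    for item in tensor:
        flat.extend(flatten_tensor(item))
    return flat

def flatten(subset):
    """Concatenate all tensors into one 1-D vector, remembering each shape."""
    vector, layout = [], []
    for name, tensor in subset.items():
        layout.append((name, shape_of(tensor)))
        vector.extend(flatten_tensor(tensor))
    return vector, layout

def reshape(values, shape):
    """Rebuild a nested list of the given shape from flat values."""
    if not shape:
        return values[0]
    if len(shape) == 1:
        return list(values)
    step = prod(shape[1:])
    return [reshape(values[i * step:(i + 1) * step], shape[1:])
            for i in range(shape[0])]

def unflatten(vector, layout):
    """Inverse of flatten: slice the vector back into named tensors."""
    subset, offset = {}, 0
    for name, shape in layout:
        size = prod(shape)
        subset[name] = reshape(vector[offset:offset + size], shape)
        offset += size
    return subset

# Hypothetical checkpoint; real ones would hold far larger tensors.
checkpoint = {
    "conv.weight": [[0.1, 0.2], [0.3, 0.4]],
    "bn.weight": [1.0, 0.9],
    "bn.bias": [0.0, -0.1],
}
subset = select_subset(checkpoint, ["bn.weight", "bn.bias"])
vector, layout = flatten(subset)
restored = unflatten(vector, layout)
```

Keeping the `layout` alongside the vector is what lets a generated vector be written back into a model: the vector alone carries no information about which values belong to which layer.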

The new approach generates model parameters from random noise, and the results show that models using the generated parameters perform as well as the originally trained models. The authors also confirmed that the generated models differ from the original ones.

Weights were generated for architectures including ResNet-18/50, ConvNeXt-T, ConvNeXt-B, ViT-Tiny, and ViT-Base. Model quality was evaluated on the MNIST, CIFAR-10/100, and ImageNet-1k datasets.